Databricks

Available only in PyCharm Professional.

The Databricks plugin allows you to connect to a remote Databricks workspace right from the IDE.

With the Databricks plugin you can:

Run Jupyter Notebooks and Python files as Databricks workflows

Synchronize your project files with a folder on a Databricks cluster

Make sure that the Databricks plugin is installed and enabled.

Make sure you have a Databricks account. To use your Databricks account on AWS, you need an existing AWS account.

Note: When you install the Databricks plugin, the auxiliary Big Data Tools Core plugin is also installed automatically.

To create a new Databricks connection:

Go to View | Tool windows | Databricks to open the Databricks tool window.

Click

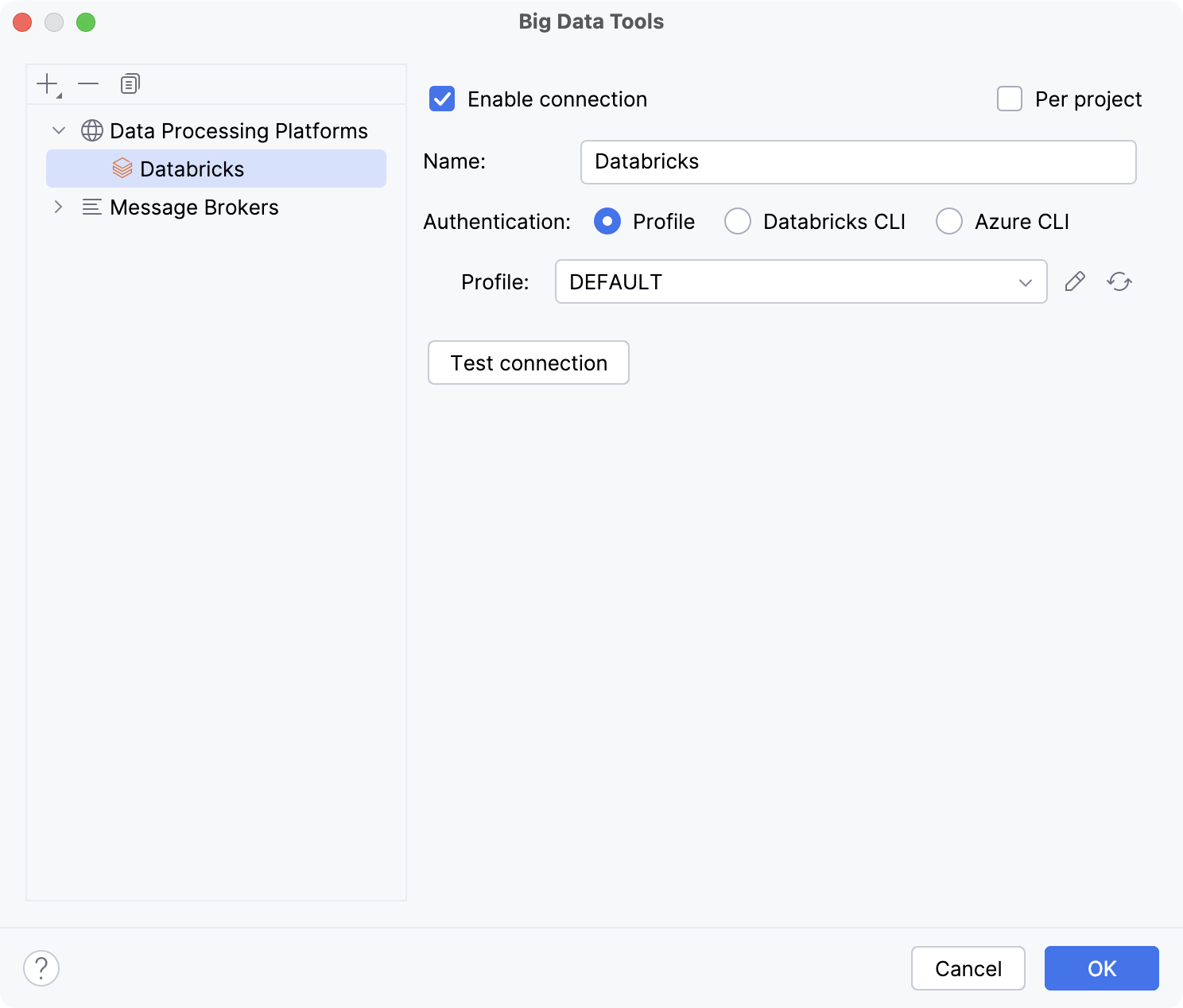

New connection. The Big Data Tools dialog opens.

You can connect to your Databricks workspace using one of the following options:

In the Name field, enter a name for the connection to distinguish it from other connections.

If you have a .databrickscfg file in your home directory, it is used automatically for profile-based authentication. If the file contains several profiles, select the one you need from the drop-down menu.

If you want to edit the .databrickscfg file, click Open .databrickscfg File to open the file in the editor.

Click Reload .databrickscfg File to reload the changed file.

Click Test connection to ensure that all configuration parameters are correct.

Click OK to save changes.
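As a rough illustration of the profile-based option above, the sketch below parses INI-style text of the kind a .databrickscfg file contains and lists the profiles found in it. The host URLs, the token placeholder, the `staging` profile name, and the `list_profiles` helper are all hypothetical, and this is not how the plugin itself reads the file; it only shows what "several profiles" means in practice.

```python
import configparser

# Hypothetical file contents; real host URLs and tokens will differ.
SAMPLE_CFG = """\
[DEFAULT]
host = https://example-workspace.cloud.databricks.com
token = dapi-placeholder

[staging]
host = https://staging-workspace.cloud.databricks.com
token = dapi-placeholder
"""


def list_profiles(cfg_text: str) -> dict[str, str]:
    """Map each profile name to its workspace host."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    profiles = {}
    # configparser keeps DEFAULT out of sections(), so add it explicitly.
    if parser.defaults().get("host"):
        profiles["DEFAULT"] = parser.defaults()["host"]
    for name in parser.sections():
        profiles[name] = parser[name]["host"]
    return profiles


if __name__ == "__main__":
    for name, host in list_profiles(SAMPLE_CFG).items():
        print(f"{name}: {host}")
```

Each section name in the file corresponds to one entry you would expect to see in the profile drop-down menu.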

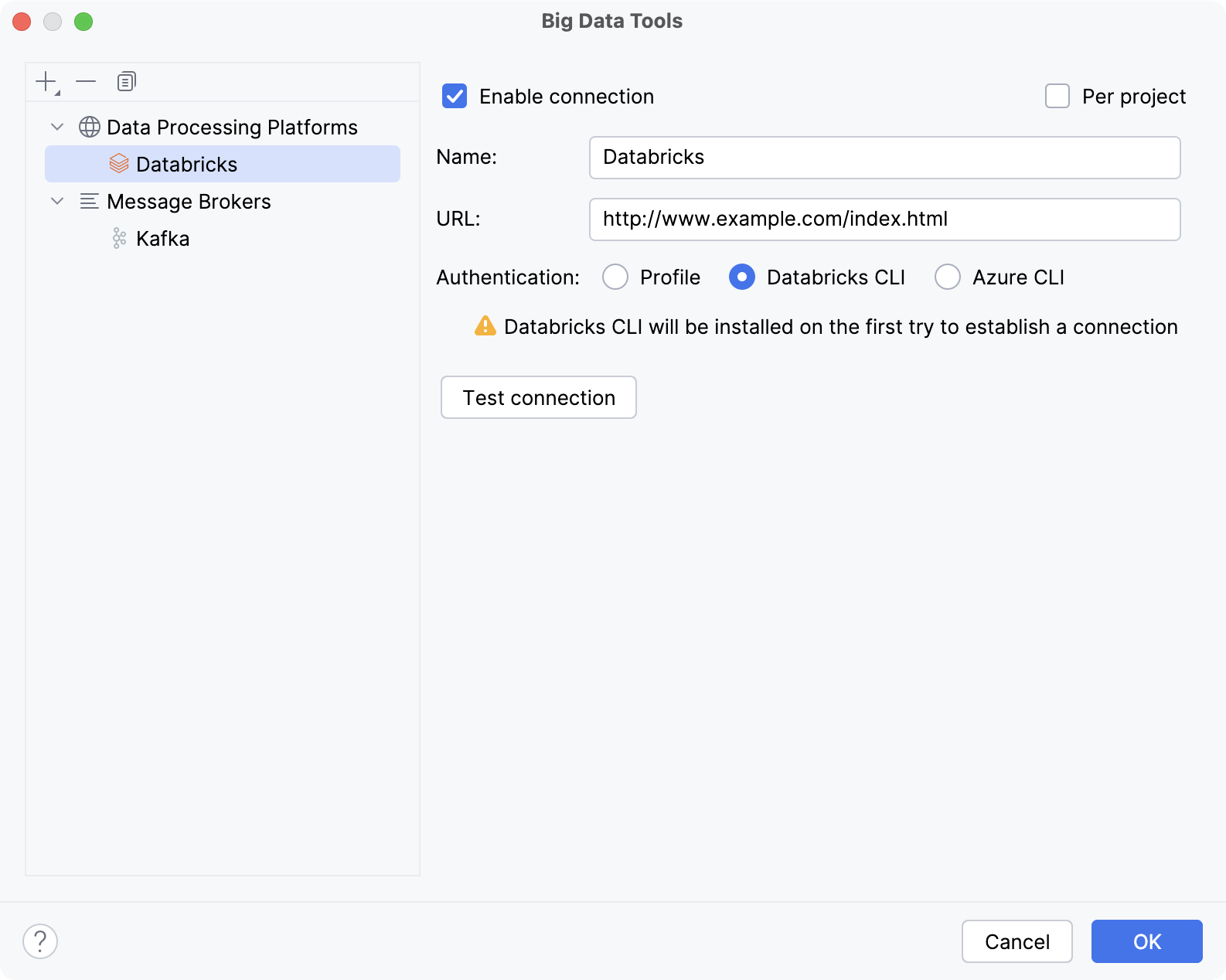

In the Name field, enter a name for the connection to distinguish it from other connections.

In the URL field, enter the URL of your Databricks workspace.

If you do not have the Databricks CLI installed, PyCharm installs it the first time it tries to establish a connection.

Click Test connection to ensure that all configuration parameters are correct.

Click OK to save changes.

In the Name field, enter a name for the connection to distinguish it from other connections.

In the URL field, enter the URL of your Databricks workspace.

If you do not have Azure CLI installed, click the Install CLI link and follow the installation instructions on the website.

Click Test connection to ensure that all configuration parameters are correct.

Click OK to save changes.

Additionally, you can configure the following settings:

Enable connection: deselect to disable this connection. By default, newly created connections are enabled.

Per project: select to enable these connection settings only for the current project. Deselect it if you want this connection to be visible in other projects.

When you run a workflow on a Databricks cluster, a series of tasks or operations is executed in a specific sequence across multiple machines in the cluster. Each task in the workflow might depend on the output of previous tasks.

Open a .py or .ipynb file in the editor.

Do one of the following:

Click Run as Workflow in the Databricks tool window.

Right-click in the editor and select Run as Workflow from the context menu.
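The idea that workflow tasks run in a specific sequence because each may depend on earlier output can be sketched locally with the standard library. The task names and the `execution_order` helper below are hypothetical, and the code does not call any Databricks API; it only shows how a valid order follows from declared dependencies.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
TASKS = {
    "ingest": set(),
    "clean": {"ingest"},
    "aggregate": {"clean"},
    "report": {"aggregate"},
}


def execution_order(tasks: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after its dependencies."""
    return list(TopologicalSorter(tasks).static_order())


if __name__ == "__main__":
    print(execution_order(TASKS))
```

In this linear chain there is only one valid order, starting with `ingest` and ending with `report`.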

When you run a job or a notebook on a Databricks cluster, your code is sent to the cluster and executed on multiple machines within it. Distributing the work this way speeds up processing and analysis, which is especially beneficial when you work with large amounts of data.

Open a .py file in the editor.

Do one of the following:

Click Run on Cluster in the Databricks tool window.

Right-click in the editor and select Run on Cluster from the context menu.
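To make the idea of splitting work across machines more concrete, here is a purely local analogy: the data is divided into partitions, each partition is processed independently, and the partial results are combined. Worker threads stand in for cluster machines, and all function names are hypothetical. This is a structural sketch only, not a performance demonstration and not the way Databricks schedules work.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data: list[int], parts: int) -> list[list[int]]:
    """Split data into roughly equal partitions."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_sum_of_squares(chunk: list[int]) -> int:
    """Work done independently on one partition."""
    return sum(x * x for x in chunk)


def distributed_sum_of_squares(data: list[int], workers: int = 4) -> int:
    """Process partitions in parallel, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum_of_squares, split(data, workers)))


if __name__ == "__main__":
    print(distributed_sum_of_squares(list(range(1, 101))))
```

The combined result matches what a single sequential pass would produce; only the way the work is divided changes.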

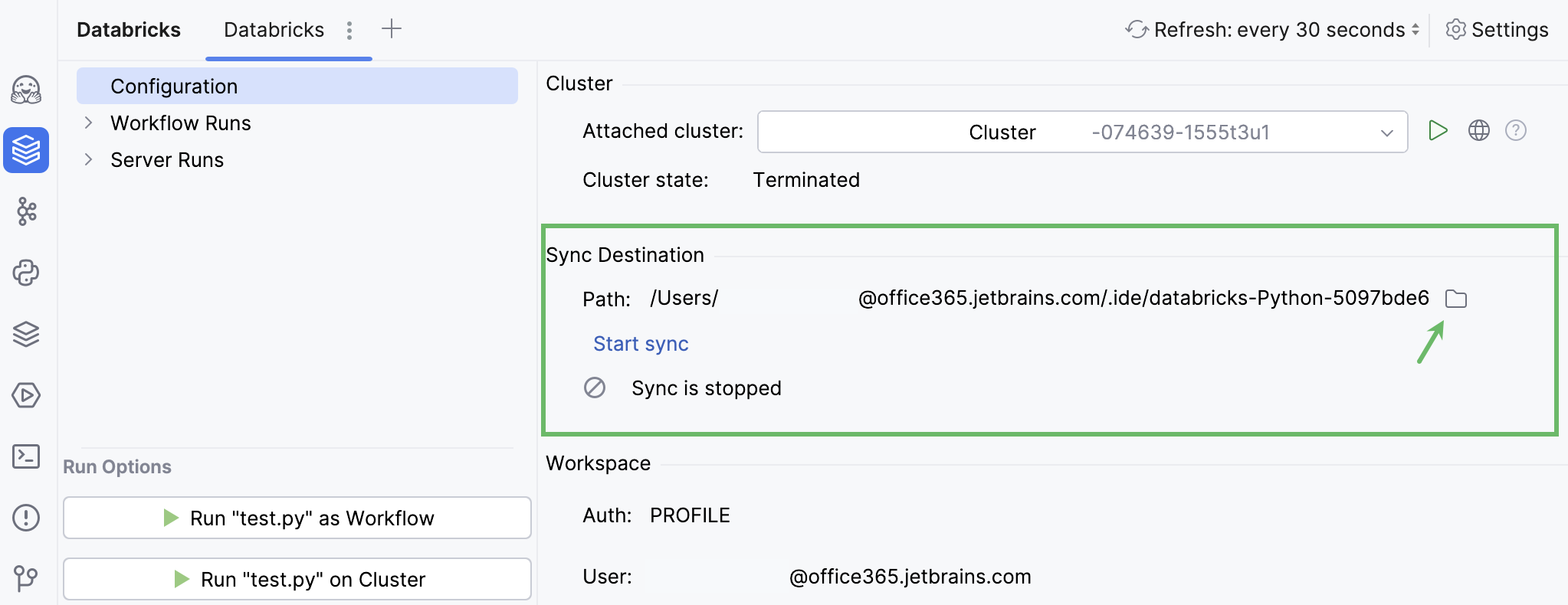

You can synchronize your project files with a Databricks cluster:

Specify a path to the folder on the Databricks cluster that you want to synchronize your files with.

Click Start Sync.
