Testing languages

Testing is an essential part of a language designer's work. To be of any use, MPS has to provide testing facilities both for BaseLanguage code and for languages. While the jetbrains.mps.baselanguage.unitTest language enables JUnit-like unit tests of BaseLanguage code, the Language test language, jetbrains.mps.lang.test, provides a useful interface for creating language tests.

tip

To minimize the impact of test assertions on the test code, the Language test language describes the testing aspects through annotations (similar to the way the generator language annotates template code with generator macros).

Different aspects of language definitions are tested with different means:

| Language definition aspects | The way to test |
|---|---|
| Intentions, Actions, Side-transforms, Editor ActionMaps, KeyMaps | Use the jetbrains.mps.lang.test language to create EditorTestCases. You set the stage by providing an initial piece of code, define a set of editing actions to perform against the initial code and provide the expected outcome as another piece of code. Any differences between the expected and the actual output of the test are reported as errors. For more information, refer to the Editor Tests section. |
| Constraints, Scopes, Type-system, Dataflow | Use the jetbrains.mps.lang.test language to create NodesTestCases. In these test cases, write snippets of "correct" code and ensure no error or warning is reported on them. Similarly, write "invalid" pieces of code and assert that an error or a warning is reported on the correct node. For more information, refer to the Nodes Tests section. |
| Generator, TextGen | Use the jetbrains.mps.lang.test.generator language to create GeneratorTests. These let you transform test models and check whether the generated code matches the expected outcome. For more information, refer to the Generator Tests section. There is currently no built-in testing facility for these aspects. There are a few practices that have worked for us over time: |
| Migrations | Use the jetbrains.mps.lang.test language to create MigrationTestCases. In these test cases, write pieces of code to run the migration on. For more information, refer to the Migration Tests section. |

There are two options to add test models into your projects.

The first option, a Test aspect in your language, is easier to set up, but it can only contain tests that do not need to run in a newly started MPS instance, so it typically holds plain BaseLanguage unit tests. To create the Test aspect, right-click the language node and choose New -> Test Aspect.

Now you can start creating unit tests in the Test aspect.

Right-clicking on the Test aspect will give you the option to run all tests. The test report will then show up in a Run panel at the bottom of the screen.

The second option, a separate test model, gives you more flexibility. Create a test model, either in a new or an existing solution. Make sure the model's stereotype is set to tests.

Open the model's properties and add the jetbrains.mps.baselanguage.unitTest language in order to be able to create unit tests. Add the jetbrains.mps.lang.test language in order to create language (node) tests.

Additionally, you need to make sure the solution containing your test model has a kind set - typically choose Other, if you do not need either of the two other options (Core plugin or Editor plugin).

Right-clicking on the model allows you to create new unit or language tests. See all the root concepts that are available:

BTestCase stands for BaseLanguage Test Case and represents a unit test written in BaseLanguage. Those familiar with JUnit will quickly feel at home.

A BTestCase has four sections - one to specify test members (fields), which are reused by test methods, one to specify initialization code, one for clean up code and finally a section for the actual test methods. The language also provides a couple of handy assertion statements, which code completion reveals.
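For readers coming from JUnit, the four sections map roughly onto the plain Java shape sketched here. This is only an analogy: a real BTestCase is edited in MPS rather than typed as text, and the class name `CounterTestSketch`, its field, its methods and the `check` helper are all hypothetical. The `runAll` method stands in for the test runner so that the example needs no JUnit dependency.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java analogy of a BTestCase; every name here is hypothetical.
final class CounterTestSketch {
    // Section 1: members (fields) shared by the test methods.
    private List<String> items;

    // Section 2: initialization code, run before each test method.
    void setUp() {
        items = new ArrayList<>();
    }

    // Section 3: clean-up code, run after each test method.
    void tearDown() {
        items = null;
    }

    // Section 4: the actual test methods.
    void testAddIncreasesSize() {
        items.add("a");
        check(items.size() == 1, "expected exactly one item");
    }

    void testNewListIsEmpty() {
        check(items.isEmpty(), "expected an empty list");
    }

    // Minimal stand-in for the assertion statements a test language provides.
    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    // Stand-in for the test runner: set up, run one test, clean up.
    // Returns the number of test methods that were run.
    static int runAll() {
        CounterTestSketch test = new CounterTestSketch();
        Runnable[] tests = { test::testAddIncreasesSize, test::testNewListIsEmpty };
        for (Runnable t : tests) {
            test.setUp();
            try {
                t.run();
            } finally {
                test.tearDown();
            }
        }
        return tests.length;
    }

    public static void main(String[] args) {
        System.out.println("tests run: " + runAll());
    }
}
```

Because initialization and clean-up wrap every test method, each method starts from a fresh `items` list and the two tests cannot influence each other.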

Some tests, for example Node tests or Editor tests, need to access the MPS project they are part of. MPS can locate the current project by itself in most cases, but an explicit project location can be provided inside a TestInfo node in the root of your test model. This applies to all modes of executing the JUnit tests:

Running from the IDE, both in-process and out-of-process: it is assumed the project to open is the one currently open.

Running from the <launchtests> task: the project path can be specified as an additional option "project path" of the task. If left unspecified, the ${basedir} is used, which corresponds to the home directory of the current project.

For a special case where neither of the above can be used, there is a possibility to specify the project location via a system property: -Dmps.test.project.path.
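As an illustration of the last item, the sketch below reads the `mps.test.project.path` system property from Java and falls back to a base directory when it is not set. It is not the lookup code MPS itself uses: the class `ProjectPathSketch`, the `resolve` method and, in particular, the order of precedence (explicit path, then system property, then base directory) are assumptions made for the example.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Illustrative only: not the lookup code MPS itself uses.
final class ProjectPathSketch {
    // System property named in the text above.
    static final String PROPERTY = "mps.test.project.path";

    // Returns the explicit path if given, else the system property, else the base directory.
    // This order of precedence is an assumption made for the sketch.
    static Path resolve(String explicitPath, String baseDir) {
        if (explicitPath != null && !explicitPath.isEmpty()) {
            return Paths.get(explicitPath);
        }
        String fromProperty = System.getProperty(PROPERTY);
        if (fromProperty != null && !fromProperty.isEmpty()) {
            return Paths.get(fromProperty);
        }
        return Paths.get(baseDir);
    }

    public static void main(String[] args) {
        // Try: java -Dmps.test.project.path=/some/project ProjectPathSketch.java
        System.out.println(resolve(null, "."));
    }
}
```

A JVM system property is set with the standard `-D` option on the `java` command line, which is why the text above writes the property as `-Dmps.test.project.path`.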

When providing a custom TestInfo node, the Project path attribute in particular is worth your attention. This is where you need to provide a path to the project root, either as an absolute or relative path, or as a reference to a Path Variable defined in MPS (Project Settings -> Path Variables).

To make the path variable available in Ant scripts, define it in your build file with the mps.macro. prefix (refer to example below).

A NodesTestCase contains three sections:

The first one contains code that should be verified. The section for test methods may contain baseLanguage code that further investigates nodes specified in the first section. The utility methods section may hold reusable baseLanguage code, typically invoked from the test methods.

To test that the type system correctly calculates types and that proper errors and warnings are reported, first write a piece of code in your desired language. Then select the nodes that you'd like to have tested for correctness and choose the Add Node Operations Test Annotation intention.

This will annotate the code with a check attribute, which then can be made more concrete by setting a type of the check:

The for error messages option ensures that potential error messages inside the checked node get reported as test failures. So, in the given example, we are checking that there are no errors in the whole Script.

The other options are:

has error - ensures a particular error is reported on a node

has expected type - ensures the annotated node is expected to have the specified type

has info - ensures a particular info message is reported on a node

has type - ensures the annotated node has the specified type

has type in - ensures the annotated node has one of the specified types

has typesystem error - ensures a specified typesystem error is reported on the annotated node

has warning - ensures a particular warning is reported on a node

If, on the other hand, you want to test that a particular node is correctly reported by MPS as having an error or a warning, use the has error / has warning option.

This works for both warnings and errors. Multiple warnings and errors can be declared with a single annotation.

You can even tie the check to the rule that you expect to report the error / warning. With the caret at the node, pick the Specify Rule References option:

An identifier of the rule has been added. You can navigate from it to the definition of the rule.

When run, the test will check that the specified rule is really the one that reports the error.

The check command offers several options to test the calculated type of a node.

Multiple expectations can be combined conveniently:

The Scope Test Annotation allows the test to verify that the scoping rules bring the correct items into the applicable scope:

The Inspector panel holds the list of expected items that must appear in the completion menu and that are valid targets for the annotated cell:

The test methods may refer to nodes in your tests through labels. You assign labels to nodes using intentions:

The labels then become available in the test methods.

The Inspector tool window provides additional options:

Can execute-in-process - lets the author of the test stop the execution when the whole test suite is run in-process. The test suite then terminates with a TestSetNotToBeExecutedInProcessException. This may be useful when the test could, for example, accidentally damage the existing project by touching other nodes.

Model access model - specifies the type of access control applied to the test:

command - the test will run wrapped in a command, so read/write access to models is granted

none - no access control applied

read - the test will run wrapped in a read action, so reading models is allowed

unset - a maintenance value needed for migration that should not be used


Editor tests allow you to test the dynamism of the editor - actions, intentions and substitutions.

An empty editor test case needs a name, an optional description, the code as it should look before the editor transformation, the code after the transformation (result) and finally, in the code section, the actual trigger that transforms the code.

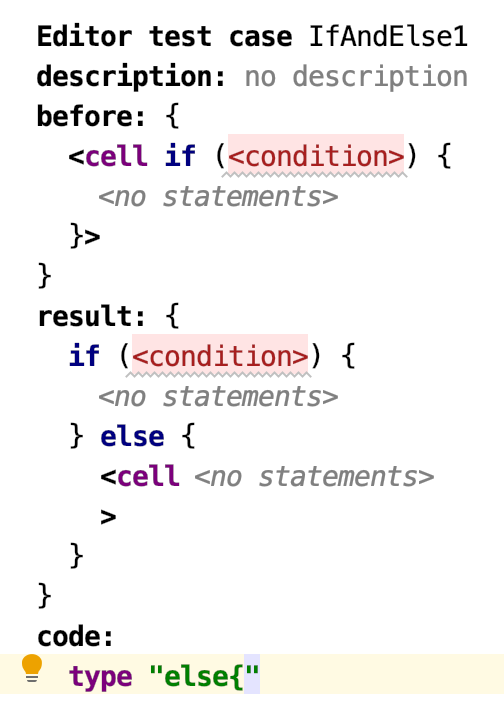

For example, a test that an IfStatement in BaseLanguage can have an "else" block added if the user types "else{" behind the closing brace would look as follows:

In the code section the jetbrains.mps.lang.test language gives you several options to invoke user-initiated actions:

type - insert some text at the current caret position

press keys - simulate pressing a key combination

invoke action - trigger an action

invoke intention - trigger an intention

invoke quick-fix XXX from YYY to the selected node - invokes a specified quick-fix named XXX to an error YYY from the intentions menu, fails if the quick-fix is not available in the menu

invoke quick-fix <the only one available> to the selected node - invokes the only quick-fix that is available in the intentions menu, fails if 0 or more than 1 quick-fixes are available
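The difference between the two quick-fix commands at the end of this list can be modelled in a few lines of plain Java. This is a simplified sketch of the rules as described above, not MPS API: the class `QuickFixSelectionSketch`, its methods and the quick-fix names are hypothetical, and quick-fixes are represented by their names only.

```java
import java.util.Arrays;
import java.util.List;

// Illustrative model of the two quick-fix commands; not MPS API.
final class QuickFixSelectionSketch {
    // Models "invoke quick-fix XXX ...": fails if the named quick-fix is not in the menu.
    static String selectNamed(List<String> available, String name) {
        if (!available.contains(name)) {
            throw new IllegalStateException("quick-fix not available: " + name);
        }
        return name;
    }

    // Models "invoke quick-fix <the only one available> ...":
    // fails if zero or more than one quick-fix is available.
    static String selectOnly(List<String> available) {
        if (available.size() != 1) {
            throw new IllegalStateException(
                "expected exactly one quick-fix, found " + available.size());
        }
        return available.get(0);
    }

    public static void main(String[] args) {
        List<String> menu = Arrays.asList("Add Missing Cast"); // hypothetical quick-fix name
        System.out.println(selectOnly(menu));
        System.out.println(selectNamed(menu, "Add Missing Cast"));
    }
}
```

The "only one available" form keeps the test short, but it starts failing as soon as a second quick-fix is offered on the same node, so the named form is the more robust choice when the set of quick-fixes is likely to grow.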

Obviously you can combine the special test commands with the plain BaseLanguage code.

tip

In order to be able to specify the desired actions and intentions, you need to import their models into the test model. Typically the jetbrains.mps.ide.editor.actions model is the most needed one when testing the editor reactions to user-generated actions.

To mark the position of the caret in the code, use the appropriate intention with the caret placed at the desired position:

The caret position can be specified in both the before and the after code:

The cell editor annotation has extra properties to fine-tune the position of the caret in the annotated editor cell. These can be set in the Inspector panel.

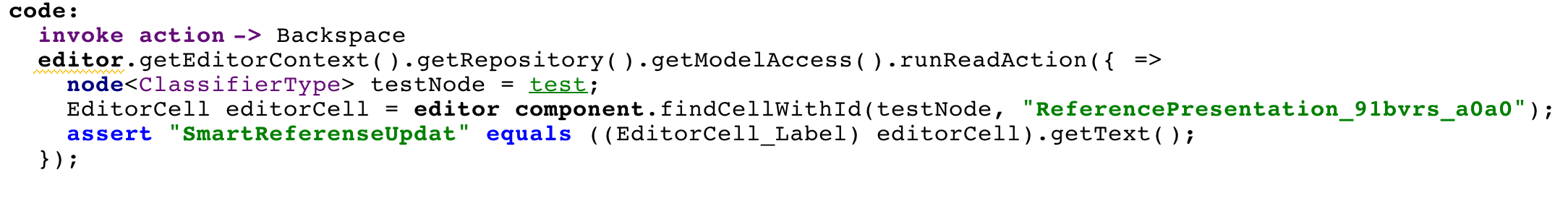

Some editor tests may wish to inspect the state of the editor more thoroughly. The editor component expression gives you access to the editor component under the caret. You can inspect its state as well as modify it, like in these samples:

The is intention applicable expression lets you test whether a particular intention can be invoked in the given editor context:

You can also get hold of the model and project using the model and project expressions, respectively.

Two-phase deletion of nodes can be tested using the editor component expression as follows:

    EditorTestUtil.runWithTwoStepDeletion({ =>
      invoke action -> Delete
      assert true DeletionApproverUtil.isApprovedForDeletion(editor component.getEditorContext(), editor component.getSelectedNode());
      assert true editor component.getDeletionApprover().isApprovedForDeletion(editor component.findNodeCell(editor component.getSelectedNode()));
      invoke action -> Delete
    }, true);

EditorTestUtil.runWithTwoStepDeletion creates a local context with two-step deletion enabled. Remember that the user can turn two-phase deletion on and off at will, so this ensures a consistent environment for the tests. The second, boolean parameter to the method indicates whether two-phase deletion should be on or off.

DeletionApproverUtil.isApprovedForDeletion - retrieves the cell corresponding to the current node and tests its "approvedForDeletion" flag.

Alternatively, use editor component.getDeletionApprover() to test the flag without the help of the utility class. You will have to find and provide the editor cell that should have the "approved for deletion" flag tested.

Migration tests can be used to check that migration scripts produce the expected results for a specified input.

To create a migration test case you should specify its name and the migration scripts to test. In many cases it should be enough to test individual migration scripts separately, but you can safely specify more than one migration script in a single test case, if you need to test how migrations interact with one another.

Additionally, migration test cases contain the nodes to be passed into the migration process and the nodes that are expected to come out as the output of the migration.

When running, migration tests behave the following way:

Input nodes are copied as roots into an empty module with single model.

Migration scripts run on that module.

Roots contained in that module after migration are compared with the expected output.

The check() method of the concerned migration(s) is invoked to ensure that it returns an empty list of problems.
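The four steps above can be sketched in plain Java. This is a simplified model, not MPS API: the `Migration` interface, the toy `RenameOldToNew` migration and the representation of root nodes as strings are all hypothetical, chosen only to make the sequence of steps concrete.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative model of the migration test flow; the types are hypothetical, not MPS API.
final class MigrationTestSketch {
    // Stand-in for a migration script working on root nodes modelled as strings.
    interface Migration {
        List<String> execute(List<String> roots); // returns the migrated roots
        List<String> check(List<String> roots);   // returns remaining problems, empty if none
    }

    // Toy migration that renames every "old" root to "new".
    static final class RenameOldToNew implements Migration {
        public List<String> execute(List<String> roots) {
            List<String> result = new ArrayList<>();
            for (String root : roots) {
                result.add(root.equals("old") ? "new" : root);
            }
            return result;
        }

        public List<String> check(List<String> roots) {
            List<String> problems = new ArrayList<>();
            for (String root : roots) {
                if (root.equals("old")) {
                    problems.add("unmigrated root: " + root);
                }
            }
            return problems;
        }
    }

    static void runMigrationTest(List<String> input, List<Migration> migrations, List<String> expected) {
        // 1. Copy the input nodes as roots into a fresh, empty container.
        List<String> roots = new ArrayList<>(input);
        // 2. Run the migration scripts on it.
        for (Migration migration : migrations) {
            roots = migration.execute(roots);
        }
        // 3. Compare the resulting roots with the expected output.
        if (!roots.equals(expected)) {
            throw new AssertionError("expected " + expected + " but got " + roots);
        }
        // 4. Invoke check() on each migration and require an empty list of problems.
        for (Migration migration : migrations) {
            List<String> problems = migration.check(roots);
            if (!problems.isEmpty()) {
                throw new AssertionError("check() reported: " + problems);
            }
        }
    }

    public static void main(String[] args) {
        List<String> input = new ArrayList<>();
        input.add("old");
        input.add("keep");
        List<String> expected = new ArrayList<>();
        expected.add("new");
        expected.add("keep");
        List<Migration> migrations = new ArrayList<>();
        migrations.add(new RenameOldToNew());
        runMigrationTest(input, migrations, expected);
        System.out.println("migration test passed");
    }
}
```

Steps 3 and 4 are independent checks: the output can match the expectation while check() still reports a problem, and either condition fails the test.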

To simplify the process of writing migration tests, the expected output can be generated automatically from the input nodes using the currently deployed migration scripts. To do this, use the intention called 'Generate Output from Input'.

Generators can be tested with generator tests. Their goal is to ensure that a generator, or set of generators, do their transformations as expected. Both in-process and out-of-process execution modes are supported from the IDE, as well as execution from MPS Ant build scripts. As with all tests in MPS the user specifies:

the pre-conditions in form of input models

the expected output of the generator in form of output models

the set of generators to apply to the input models in form of an explicit generator plan or, if omitted, the implicit generator plan is used.

The jetbrains.mps.lang.test.generator language allows you to create GeneratorTests. The jetbrains.mps.lang.modelapi language lets you create convenient model references using the model/name of the model/ syntax.

Notice that the structure of a generator test gives you a section called Arguments, where all the models need to be specified (input, expected output and optionally also models holding the generation plans), and a section called Assertions, where the desired transformations and matching are specified.

A failure to match the generator output with the expected output is presented to the user in the test report:

The way the expected output is compared with the actual result of generation tests can be configured with Match Options.

These match options can then be associated with assertions.

Since the Project tool window always lists root nodes in alphabetical order, it hides the actual physical order of root nodes in the model. By default, the order of root nodes matters when models are compared during generation tests. To make the tests pass, you can either change the Match options for your test or manually re-order the root nodes in the output models to reflect what you expect from your generator.
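The effect of root ordering on the comparison can be illustrated with a small plain-Java model. It is not how MPS implements matching: root nodes are reduced to their names, and the `ignoreOrder` flag is a hypothetical stand-in for a match option rather than the name of an actual setting.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Illustrative model of order-sensitive vs order-insensitive comparison of root nodes.
final class RootOrderMatchSketch {
    // ignoreOrder is a hypothetical flag, not the name of an actual match option.
    static boolean matches(List<String> actualRoots, List<String> expectedRoots, boolean ignoreOrder) {
        if (!ignoreOrder) {
            // Default behaviour described above: the physical order of roots matters.
            return actualRoots.equals(expectedRoots);
        }
        // Order-insensitive: compare sorted copies, leaving the inputs untouched.
        List<String> actual = new ArrayList<>(actualRoots);
        List<String> expected = new ArrayList<>(expectedRoots);
        Collections.sort(actual);
        Collections.sort(expected);
        return actual.equals(expected);
    }

    public static void main(String[] args) {
        // The generator produced B before A, while the expected model stores A before B.
        // An alphabetically sorted tree view would show both models as A, B.
        List<String> generated = Arrays.asList("B", "A");
        List<String> expected = Arrays.asList("A", "B");
        System.out.println(matches(generated, expected, false)); // false
        System.out.println(matches(generated, expected, true));  // true
    }
}
```

The two models look identical in an alphabetically sorted view, yet the strict comparison fails, which is the situation the paragraph above describes.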

To run tests in a model, just right-click the model in the Project View panel and choose Run tests:

If the model contains any of the jetbrains.mps.lang.test tests, a new instance of MPS is silently started in the background (that's why it takes quite some time to run these compared to plain baseLanguage unit tests) and the tests are executed in that new MPS instance. A new run configuration is created, which you can then re-use or customize:

The Run configurations dialog gives you options to tune the performance of tests.

Override the default settings location - specify the directory to save the caches in. By default, MPS chooses the temp directory. The directory is cleared on every run.

Execute in the same process - to speed up testing, tests can be run in a so-called in-process mode. It was designed specifically for tests that need to have an MPS instance running. (For example, for the language type-system tests MPS should safely be able to check the types of nodes on the fly.)

One way to run tests is to have a new MPS instance started in the background and run the tests in this instance. The second way, enabled by this checkbox, runs all tests in the same original MPS process, so no new instance needs to be created.

When the option Execute in the same process is set, the test is executed in the current MPS environment. This is the default for:

Node tests

Editor tests

tip

This option is not available for unit tests (BTestCases and JUnit test cases) and the tests will fail if run in the MPS process.

To run tests in the original way (in a separate process) you should uncheck this option. This is the default for:

Migration tests

Generation tests

Unit tests (BTestCases and JUnit test cases)

tip

Although in-process test execution performs much better, this workflow has certain drawbacks. Note that the tests are executed in the same MPS environment that holds the project, so the code you write in your test may be dangerous and can sometimes cause real harm.

For example, a test, which disposes the current project, could destroy the whole project. So the user of this feature needs to be careful when writing the tests. There are certain cases when the test must not be executable in-process. In that case it is possible to switch an option in the inspector to prohibit the in-process execution for that specific test.

The test report is shown in the Run panel at the bottom of the screen:

The JUnit run configuration accepts plugins to deploy before running the tests. The user can provide a list of IDEA plugins to be deployed during the test execution. The before task 'Assemble Plugins' is available in the JUnit run configuration as well. It automatically builds the given plugins and copies the artifacts to the settings directory.

To have your generated build script offer a test target that runs the tests from Ant, import the jetbrains.mps.build.mps and jetbrains.mps.build.mps.tests languages into your build script, declare the use of the module-tests plugin and specify a test modules configuration.

By default, JUnit tests in MPS generate the test reports in the "vintage" and "jupiter" formats. In addition to that, the Open Test report format can be enabled explicitly with the create open test report option. If the option is set to true, report files named "junit-platform-events*-$BUILD_NAME$.xml" are created in the project directory.

The reports dir option can be used to customize the output directory for the generated test reports.

To define a macro that Ant will pass to JUnit (e.g. for use in TestInfo roots in your tests), prefix it with mps.macro.: