Stop using LLMs for these tasks!

Discover the ins and outs of large language models (LLMs) with Dr. Jodie Burchell. Unravel the capabilities and pitfalls of LLMs like GPT and learn whether they fit your business needs. From language tasks to rule-based limitations, Jodie covers the critical elements of applying LLMs in a business context, the cost implications, and the technical challenges involved.

What LLMs Are

- Definition: LLMs are neural network models, specifically transformer models, capable of performing a range of natural language tasks.

- Capabilities: They excel at text generation, summarization, classification, translation, and limited problem-solving with text.

- Training Data: These models are trained on vast amounts of text drawn from web-scale corpora (the raw data runs to petabytes), encoding substantial knowledge about language structure.

Limitations of LLMs

- Rule-Based Problems: LLMs struggle with tasks involving mathematical logic or rule-based reasoning.

- Hallucinations: When a question falls outside what the model has learned, an LLM may still produce a plausible-sounding but incorrect answer.

- Outdated Knowledge: Because training is so expensive, models are retrained infrequently, so their knowledge is fixed at a training cutoff and becomes outdated over time.

- Resource Intensive: Running large models requires significant computing power (tens of gigabytes of GPU memory) and can be costly.

- Cost of Accuracy: High accuracy often requires large models and extensive data, both of which are expensive to maintain and operate.
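The "tens of gigabytes" figure follows from simple arithmetic: the weights alone take roughly the parameter count multiplied by the bytes stored per parameter. The sketch below is a rule-of-thumb estimate, not a sizing tool. The model sizes (7B, 13B, 70B) and the precision table are illustrative assumptions rather than figures from the talk, and the estimate deliberately ignores activations, the KV cache, and framework overhead, all of which push real requirements higher.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Rough GPU memory (GB, where 1 GB = 1e9 bytes) needed just to hold the weights.

    Assumption: weights only. Activations, the KV cache, and framework
    overhead are ignored, so real requirements are higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Illustrative model sizes, not taken from the talk.
for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    print(f"{name}: {weight_memory_gb(params):.0f} GB of weights in fp16")
```

At 16-bit precision, a 7B-parameter model needs about 14 GB just for its weights and a 70B model about 140 GB, which already exceeds the memory of a single 80 GB data-center GPU.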

Challenges in Using LLMs

- Complexity in Deployment: Implementing LLMs involves complex processes, and achieving good performance "out of the box" is challenging.

- Retrieval-Augmented Generation (RAG): Improves LLM answers by supplying additional, task-specific information at query time, but adds another stage to the pipeline that must be built and maintained.

- High Salaries for Expertise: Employing skilled ML and MLOps engineers is costly, with salaries ranging from $100,000 to $150,000 per year in the U.S.
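To make the added pipeline complexity of retrieval-augmented generation concrete, here is a minimal sketch of the retrieval step on its own. It assumes a toy bag-of-words retriever scored by cosine similarity, whereas production RAG systems usually rank passages with learned embeddings held in a vector store. The function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical, and the final call to an LLM is omitted.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Deliberately crude tokenizer: lowercase, keep runs of letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Paste the retrieved passages into the prompt so the model can ground its answer.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base for a customer-support bot.
docs = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Premium plans include priority email support.",
]
print(build_prompt("How long do refunds take?", docs, k=1))
```

Even this toy version puts a tokenizer, a similarity measure, a ranking step, and a prompt template in front of the model, and in a real deployment each of those pieces needs tuning and maintenance.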

Assessing the Need for LLMs

- Non-Language Problems: If the problem isn't related to natural language, LLMs are unnecessary.

- Language Problems: Even for language-related issues, simpler models or traditional machine learning techniques might suffice.

- Alternatives: Techniques like binary/count vectorization, word and document embeddings (e.g., word2vec, doc2vec), and smaller fine-tuned models like DistilBERT can be effective and far less resource-intensive.
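As an illustration of how far the simpler route can go, the sketch below pairs count (or binary) vectorization with a multinomial naive Bayes classifier in plain Python. The choice of classifier, the function names, and the tiny labelled dataset are assumptions made for illustration, not something taken from the talk; the point is that a workable text classifier needs no GPU at all.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split into runs of letters, digits, and apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocab(texts: list[str]) -> list[str]:
    # One feature column per distinct token seen in the training texts.
    return sorted({tok for text in texts for tok in tokenize(text)})


def vectorize(text: str, vocab: list[str], binary: bool = False) -> list[int]:
    # Count vectorization: how often each vocabulary word occurs in the text.
    # With binary=True each column is 1 if the word occurs at all, else 0.
    # Words outside the vocabulary are ignored.
    counts = Counter(tokenize(text))
    return [min(counts[w], 1) if binary else counts[w] for w in vocab]


def train_naive_bayes(vectors: list[list[int]], labels: list[str], alpha: float = 1.0):
    # Multinomial naive Bayes with add-alpha (Laplace) smoothing.
    classes = sorted(set(labels))
    n_features = len(vectors[0])
    log_prior, log_likelihood = {}, {}
    for c in classes:
        rows = [v for v, y in zip(vectors, labels) if y == c]
        log_prior[c] = math.log(len(rows) / len(vectors))
        # Total count of each vocabulary word across all documents of class c.
        totals = [sum(col) for col in zip(*rows)]
        denom = sum(totals) + alpha * n_features
        log_likelihood[c] = [math.log((t + alpha) / denom) for t in totals]
    return classes, log_prior, log_likelihood


def predict(vector: list[int], model) -> str:
    # Pick the class with the highest log-posterior score.
    classes, log_prior, log_likelihood = model
    scores = {
        c: log_prior[c] + sum(x * lp for x, lp in zip(vector, log_likelihood[c]))
        for c in classes
    }
    return max(scores, key=scores.get)


# Hypothetical app-review data: "bug" reports versus "praise".
train_texts = [
    "the app crashes when I open it",
    "crashes every time after the update",
    "love the new design, great app",
    "great update, works perfectly",
]
train_labels = ["bug", "bug", "praise", "praise"]

vocab = build_vocab(train_texts)
model = train_naive_bayes([vectorize(t, vocab) for t in train_texts], train_labels)
print(predict(vectorize("it crashes on startup", vocab), model))  # → bug
print(predict(vectorize("great design", vocab), model))  # → praise
```

In practice you would reach for a library such as scikit-learn, whose `CountVectorizer` (with its `binary` option) and `MultinomialNB` implement the same two steps.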

Appropriate Use Cases for LLMs

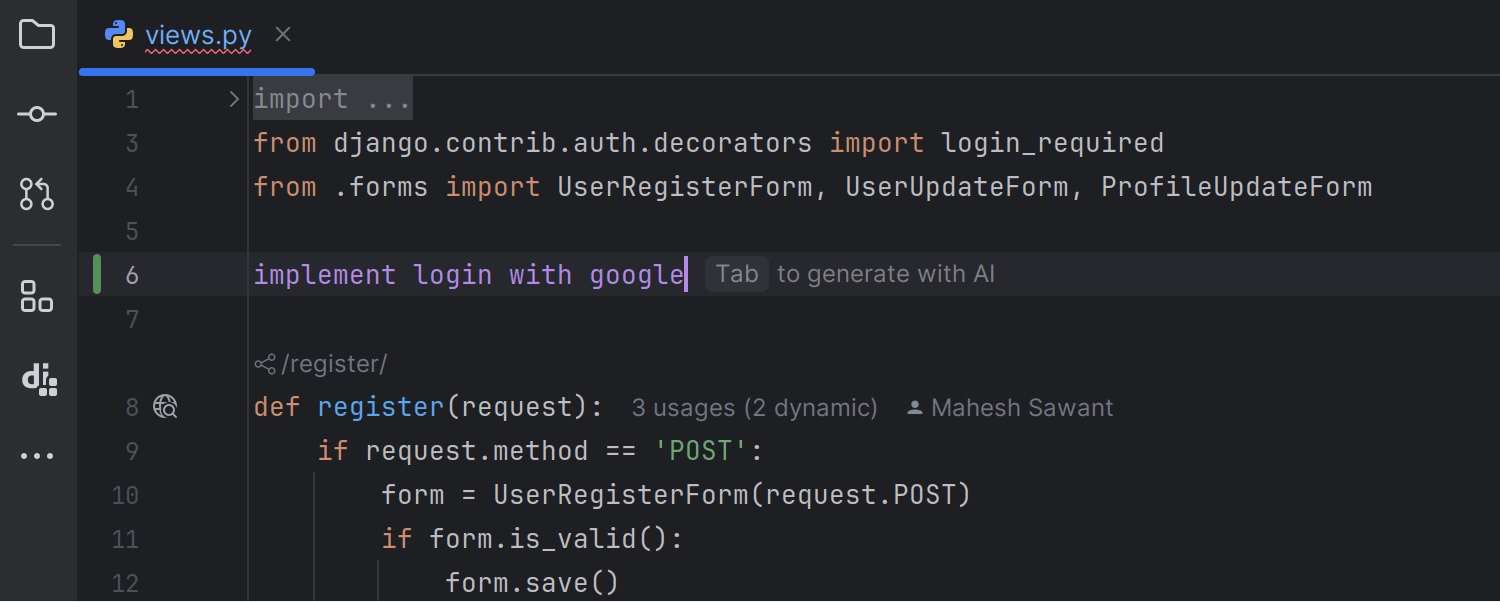

- Complex Language Tasks: LLMs are best for sophisticated tasks like text summarization, customer support interfaces, and coding assistance.

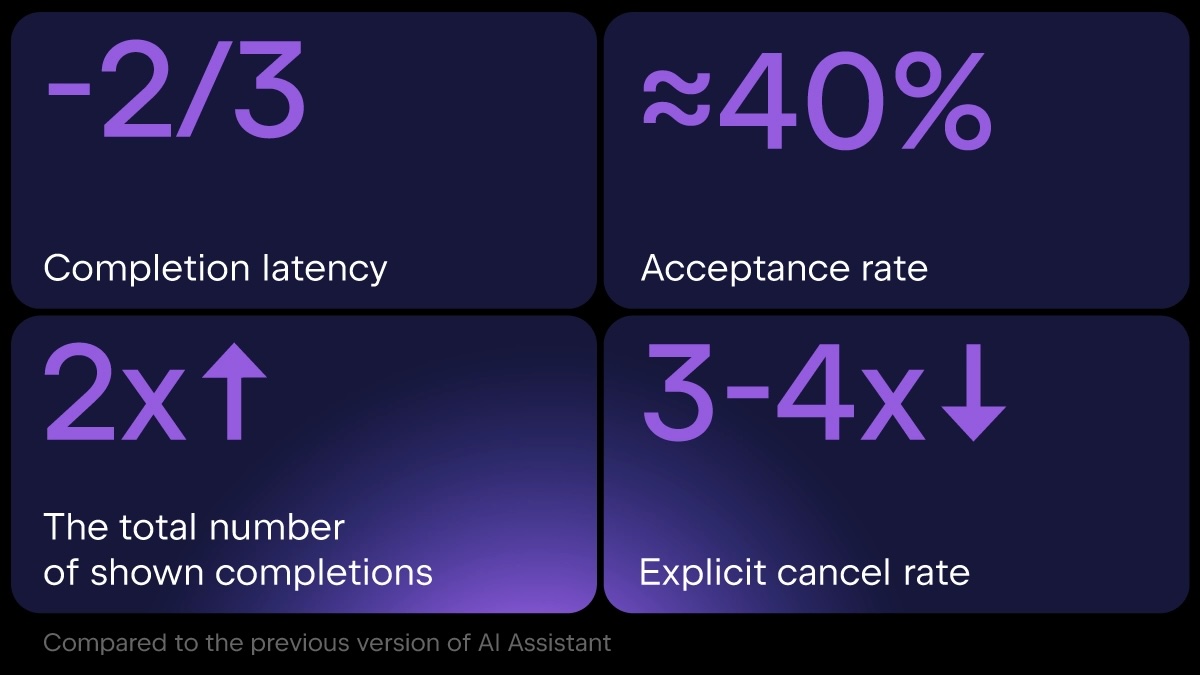

- JetBrains Examples: JetBrains uses LLMs in its AI Assistant, available in Datalore Team and in various JetBrains IDEs, to help developers and data scientists.

Conclusion

- Balanced View: Jodie emphasizes that while LLMs have impressive capabilities, they are not a one-size-fits-all solution and should be applied to specific use cases where their strengths can be fully leveraged.